19 May 2020

I often find myself wanting lldb to evaluate an Objective-C expression when the current language is Swift.

I’ve drastically improved my debugging experience by adding a couple of command aliases to make this a quick thing to do.

In my ~/.lldbinit I’ve added the following:

command alias set-lang-objc settings set target.language objective-c

command alias set-lang-swift settings set target.language Swift

Why?

Here’s a couple concrete examples of how changing language can make things simpler

Calling private selectors from Swift

Calling non public selectors is a pain in Swift.

A few selectors I commonly want to use are:

+[UIViewController _printHierarchy]

-[UIView recursiveDescription]

-[UIView _parentDescription]

-[NSObject _ivarDescription]

In order to call these in Swift I have to use value(forKey:) - this is more characters to type and includes speech marks, which my fingers are generally slightly slower and more error prone at locating.

po UIViewController.value(forKey: "_printHierarchy")

po someView.value(forKey: "recursiveDescription")

Swapping the language to Objective-C removes a lot of the boilerplate typing:

set-lang-objc

po [UIViewController _printHierarchy]

po [someView recursiveDescription]

Calling methods in Swift when you only have a pointer address

Often I don’t have a variable in my current frame as I’m just grabbing memory addresses from other debug print commands or the view debugger (awesome use case).

Once you have a memory address in Swift you have to do the following to call a method:

po unsafeBitCast(0x7fd37e520f30, to: UIView.self).value(forKey: "recursiveDescription")

Changing the language to Objective-C this becomes considerably simpler:

set-lang-objc

po [0x7fd37e520f30 recursiveDescription]

One more thing…

In addition to the two aliases above I’ve had the following alias (that came from StackOverflow at some point) in my tool bag for a long time.

It’s really helpful for single shot commands where I want to evaluate Objective-C without changing the language for subsequent commands.

Back in my ~/.lldbinit I have:

command alias eco expression -l objective-c -o --

Which allows me to run a single command in Objective-C whilst the language is currently set to Swift

eco [0x7fd37e520f30 recursiveDescription]

17 Apr 2020

I was pairing on a task and my colleague watched me repeatedly call the same git commands over and over in the terminal.

I’d gotten into such a rhythm of calling the same sequence of commands that I hadn’t thought to codify it into one step.

My colleague called me on it so now I’m writing a mini post in the hope it will force me to learn the lesson and share a helpful command.

The process I was repeating was for tidying up my local git repo when work had been merged on the remote.

I was following a two step process of pruning remote branches and then removing local branches that have been merged.

The second part is more complex so let’s break that down first.

Removing local branches

We need to start by getting a list of branches that are merged:

➜ git branch --merged

bug/fix-text-encoding

feature/add-readme

master

* development

The output above shows that there are some branches that we probably don’t want to delete.

master is our default branch so we want to keep that around and the branch marked with * is our current branch, which we also want to keep.

We can protect these two branches by filtering our list:

➜ git branch --merged | egrep --invert-match "(\*|master)"

The grep here is inverted so it will only let lines through that do not match our regular expression.

In this case our regular expression matches the literal master or any line starting with an asterisk.

The final thing to do with this filtered list is to delete these branches.

For this we pipe the output into git branch --delete --force:

➜ git branch --merged | egrep --invert-match "(\*|master)" | xargs git branch --delete --force

Removing references to remote branches

This is much simpler and can be achieved with:

Tying the two things together we get a final command of:

➜ git fetch --prune && git branch --merged | egrep --invert-match "(\*|master)" | xargs git branch --delete --force

Giving it a name

The above is quite a long command and although you can use your command line history to find it, we can do better.

I chose the name git delete-merged for my command, which can be achieved by adding an alias to my global gitconfig file:

➜ git config --global alias.delete-merged "\!sh -c 'git fetch --prune && git branch --merged | egrep --invert-match \"(\*|master)\" | xargs git branch --delete --force'"

With this in place I can now just call:

17 Jun 2019

You can learn a lot by exploring an application’s ipa.

Details like how it’s built, how it tracks its users and whether it has any obvious security vulnerabilities.

This is all good information to know if you are looking to take a new job where they have existing apps.

In this post I’m going to show how you can get some insights into existing apps without having to venture into jailbreaking.

Getting an ipa from the App Store

This is annoyingly fiddly but can be done like this:

- Download the app you want to investigate onto your device.

- Install

Apple Configurator 2 from the app store and launch it.

- Sign into your Apple account in

Apple Configurator 2.

- Connect a device.

- Right click on your device and select

Add > Apps....

- Select the app you want to investigate and click

Add.

- After some time you should be presented with an error dialog along the lines of:

The app named "Some App" already exists on "Your iPhone".

- Use terminal (or navigate manually) to find the downloaded file in the following directory:

open ~/Library/Group\ Containers/K36BKF7T3D.group.com.apple.configurator/Library/Caches/Assets/TemporaryItems/MobileApps

- Locate the

ipa for the app you are interested in and copy it somewhere else.

NB: You must copy before accepting the error dialog or the ipa will be deleted.

Preparation

Now you have the ipa you can start to explore. Start by renaming the .ipa suffix to .zip and then double click the file to unzip.

Inside the zip you should find a file with a .app suffix, go ahead and remove the suffix to make exploration a little simpler.

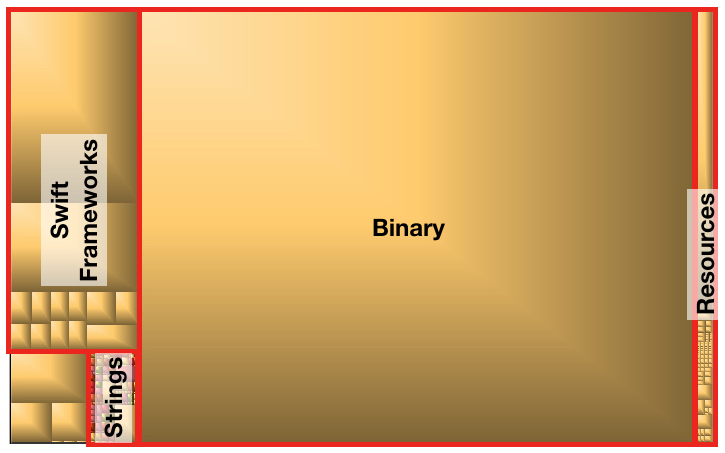

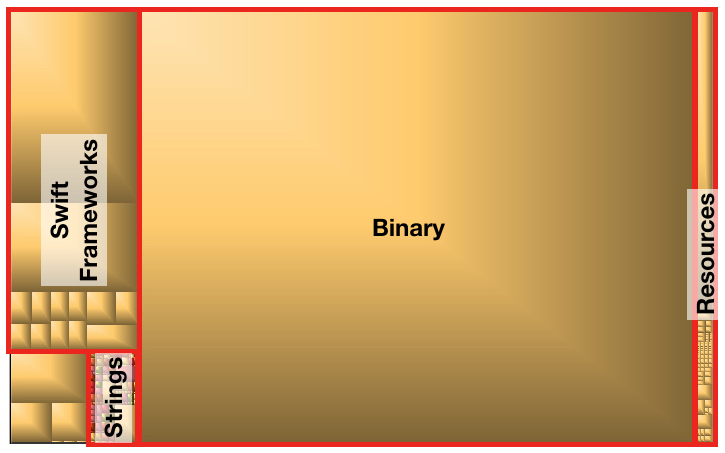

To help visualise the structure of an app I recommend using a tool like Grand Perspective, which will generate an interactive map of the used disk space.

Here’s what the diagram looks like for VLC for iOS:

Investigation

Now it’s just a case of poking around and seeing what is there.

If you are exploring in preparation for an interview, then you should really be taking note of what you find and considering if you need to do any research into these things.

Here’s some stuff that you might see and what it might mean:

*.car files

Indicates that this app uses Asset catalogues - these have been around for a while now but to brush up here’s the reference.

Frameworks directory

You can learn a lot about how an app is built by examining the contents of this folder.

Questions to ask in here are:

- Are there too many dependencies?

- Are dependencies well maintained?

- The frameworks generally show their version information in their plist.

- This can be worrying especially if you can see a framework version being used that is known to be vulnerable.

- Are there creepy analytics SDKs?

- Is the app built using some Swift? The presence of libraries of the format

libSwift* indicates Swift is used.

Info.plist file

The Info.plist file contains all kinds of useful information:

It’s always worth looking in this file as it defines a lot of the app’s capabilities - here’s the reference.

It’s also an easy place for developers to dump information (sometimes incorrectly) to use within the app, so there could be some secrets being exposed.

*.lproj directories

These folders are present in localised apps - the more folders there are the more territories that the app has been localised to.

If you want to read the contents of the *.strings files within these directories then go ahead and rename the extension from .strings to .plist.

*.momd directories

This directory shows that the app is using CoreData - here’s the reference.

To get a sense of what data is being stored you can peek in the *.mom files by renaming the the extension from .mom to .plist.

*.nib or *.storyboardc files

Not everyone is a fan of using interface builder so seeing a load of these in the ipa will either raise red flags or finally give you a reason to conquer that fear and give them another chance.

Settings.bundle directory

You can explore this by right clicking the file and selecting Show Package Contents.

Here’s the reference for settings bundles.

Dynamic Analysis

Doing the above is great for getting a look at how the app might be built but it’s also worth looking at how the app runs.

This is where I would be loading up Charles Proxy on my device and running the app to see what network requests are being made.

Once you have some network data you can ask questions like:

- Are they using

https?

- Do the API requests being made look reasonable?

- Is the app quite chatty on the network?

- Are there any security issues or data being leaked within the requests?

Conclusion

You don’t have to go straight to jailbreaking devices to get a rough idea of how an app is built.

I personally find this exploration interesting and really helpful when looking at potential career moves e.g. doing a bit of due diligence to make sure I’m not stepping into a burning app.