Wrapping a difficult dependency

18 May 2021

Most of us have to deal with integrating 3rd party APIs into our apps for a multitude of reasons. Wisdom tells us to wrap dependencies and make them testable, but a lot of vendors unintentionally make this really difficult. In this post we are going to look at an API that has several design flaws that make it awkward to isolate, and then we will run through the various techniques to tame the dependency.

Let’s start by looking at a stripped down example that shows a few of the issues:

public class ThirdParty {
    public static var delegate: ThirdPartyDelegate?

    public static func loadFeedbackForm(_ id: String) { /* ... */ }
}

public protocol ThirdPartyDelegate {
    func formDidload(_ viewController: UIViewController)
    func formDidFailLoading(error: ThirdPartyError)
}

public struct ThirdPartyError: Error {
    public let description: String
}

The design decisions that the third party author has made make this tricky to work with for a few reasons:

- delegate and loadFeedbackForm are both static, making this a big ol’ blob of global state
- It’s using a delegate pattern, which is a bit old school, so we’ll want to convert to a callback-based API
- ThirdPartyError has no public init, so we can’t create an instance ourselves for our tests
- The delegate callbacks don’t give any indication of what ID they are being invoked for

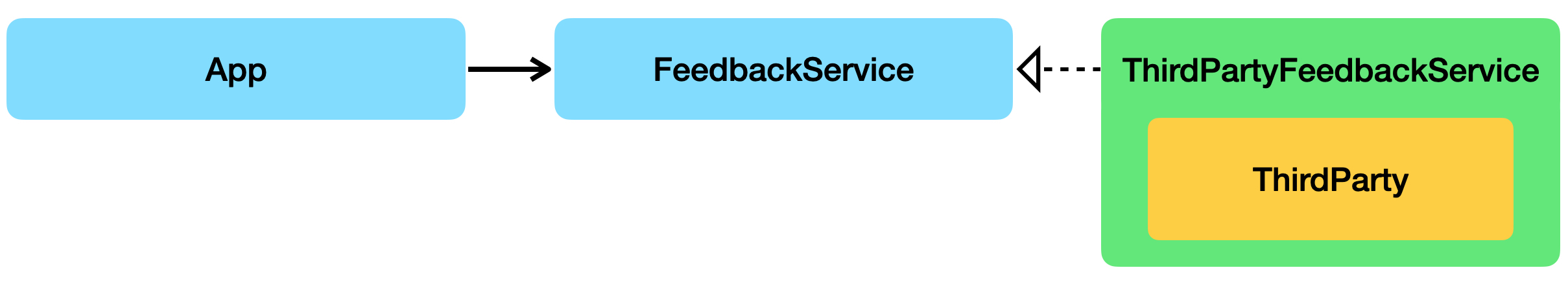

Figuring out the high level plan

We don’t want our application to use ThirdParty directly as it ties us to the 3rd party API design and we can’t really test effectively.

What we can do is create a protocol for how we want the API to look and then make a concrete implementation of this protocol for wrapping the dependency.

The high level view will look like this:

A reasonable API might look like this:

protocol FeedbackService {
    func load(_ id: String, completion: @escaping (Result<UIViewController, Error>) -> Void) throws
}

With this set up, our tests can verify the app’s behaviour by substituting a test double that implements FeedbackService, so that we don’t hit the real ThirdParty implementation.

These tests are not particularly interesting to look at as creating a class MockFeedbackService: FeedbackService is fairly easy.
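For completeness, here is a minimal sketch of what such a test double could look like. It is an illustration under assumptions rather than code from a real project: FormViewController is a stand-in for UIViewController so that the snippet compiles without UIKit, and the requestedIDs, stubbedResult and errorToThrow properties are hypothetical names.

```swift
import Foundation

// Stand-in for UIViewController (assumption) so the sketch builds without UIKit.
final class FormViewController {}

// Same shape as the FeedbackService protocol above, using the stand-in type.
protocol FeedbackService {
    func load(_ id: String, completion: @escaping (Result<FormViewController, Error>) -> Void) throws
}

// Hypothetical test double: records the requested IDs and replays a canned result.
final class MockFeedbackService: FeedbackService {
    private(set) var requestedIDs: [String] = []
    var stubbedResult: Result<FormViewController, Error>
    var errorToThrow: Error?

    init(stubbedResult: Result<FormViewController, Error>) {
        self.stubbedResult = stubbedResult
    }

    func load(_ id: String, completion: @escaping (Result<FormViewController, Error>) -> Void) throws {
        requestedIDs.append(id)
        // Simulate the "load already in flight" path when a test asks for it.
        if let errorToThrow = errorToThrow { throw errorToThrow }
        completion(stubbedResult)
    }
}

// Usage: the code under test only ever sees the FeedbackService protocol.
let form = FormViewController()
let mock = MockFeedbackService(stubbedResult: .success(form))
var received: FormViewController?
try mock.load("some-id") { result in
    if case let .success(viewController) = result { received = viewController }
}
```

Completing synchronously keeps app-level tests simple. A double that stores the completion and fires it later would be needed to exercise loading states.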

However, it is interesting to delve into how to wrap and verify the ThirdParty dependency itself, with all its design warts.

Wrapping the dependency

The first design pain is that delegate is a static var and the delegate callbacks do not return any identifier to differentiate multiple loadFeedbackForm calls.

From this we can take the decision to make it an error to have more than one loadFeedbackForm call in flight at any one time.

We can use the fact that if the delegate is non-nil then we must already have an inflight request.

To write a test to cover this we can create an instance of ThirdPartyFeedbackService and provide a stubbed function for fetching the delegate. The production code (shown below the test) defaults this function to proxying to ThirdParty.delegate.

Hardcoding this getDelegate function to return a non-nil value means that any subsequent call to load should throw an error:

func testWhenLoadIsInFlight_subsequentLoadsWillThrow() throws {
    let subject = ThirdPartyFeedbackService(getDelegate: { ThirdPartyFeedbackService() })

    XCTAssertThrowsError(try subject.load("test", completion: { _ in })) { error in
        XCTAssertTrue(error is ThirdPartyFeedbackService.MultipleFormLoadError)
    }
}

The production code to make this pass would look like this:

public class ThirdPartyFeedbackService: FeedbackService {
    private let getDelegate: () -> ThirdPartyDelegate?

    public init(getDelegate: @escaping () -> ThirdPartyDelegate? = { ThirdParty.delegate }) {
        self.getDelegate = getDelegate
    }

    public func load(_ id: String, completion: @escaping (Result<UIViewController, Error>) -> Void) throws {
        guard getDelegate() == nil else {
            throw MultipleFormLoadError()
        }
    }

    public struct MultipleFormLoadError: Error {}
}

extension ThirdPartyFeedbackService: ThirdPartyDelegate {
    public func formDidload(_ viewController: UIViewController) {}
    public func formDidFailLoading(error: ThirdPartyError) {}
}

The next thing to verify is that a normal load call correctly sets the delegate and also that we pass along the right id to the underlying ThirdParty dependency.

Again, we don’t want to actually invoke ThirdParty, so we need to provide stubs for loadFeedback and setDelegate. In normal usage these default to the production implementations.

A test to exercise this might look like this:

func testLoadSetsDelegateAndInvokesLoad() throws {
    // Given
    var capturedFormID: String?
    var capturedDelegate: ThirdPartyDelegate?

    let subject = ThirdPartyFeedbackService(
        loadFeedback: { capturedFormID = $0 },
        getDelegate: { nil },
        setDelegate: { capturedDelegate = $0 }
    )

    // When
    try subject.load("some-id") { _ in }

    // Then
    XCTAssertEqual("some-id", capturedFormID)
    // The cast keeps === valid even though ThirdPartyDelegate is not class-bound.
    XCTAssertTrue(subject === (capturedDelegate as? ThirdPartyFeedbackService))
}

To make this pass we can update the production code like this:

  public class ThirdPartyFeedbackService: FeedbackService {
+     private let loadFeedback: (String) -> Void
      private let getDelegate: () -> ThirdPartyDelegate?
+     private let setDelegate: (ThirdPartyDelegate?) -> Void

      public init(
+         loadFeedback: @escaping (String) -> () = ThirdParty.loadFeedbackForm,
          getDelegate: @escaping () -> ThirdPartyDelegate? = { ThirdParty.delegate },
+         setDelegate: @escaping (ThirdPartyDelegate?) -> Void = { ThirdParty.delegate = $0 }
      ) {
+         self.loadFeedback = loadFeedback
          self.getDelegate = getDelegate
+         self.setDelegate = setDelegate
      }

      public func load(_ id: String, completion: @escaping (Result<UIViewController, Error>) -> Void) throws {
          guard getDelegate() == nil else {
              throw MultipleFormLoadError()
          }

+         setDelegate(self)
+         loadFeedback(id)
      }

      public struct MultipleFormLoadError: Error {}
  }

  extension ThirdPartyFeedbackService: ThirdPartyDelegate {
      public func formDidload(_ viewController: UIViewController) {}
      public func formDidFailLoading(error: ThirdPartyError) {}
  }

With the above modifications we know we are setting things up correctly so the next step is to verify that a successful load will invoke our completion handler. This test will exercise this for us:

func testWhenLoadIsSuccessful_itInvokesTheCompletionWithTheLoadedViewController() throws {
    // Given
    var capturedDelegate: ThirdPartyDelegate?

    let subject = ThirdPartyFeedbackService(
        loadFeedback: { _ in },
        getDelegate: { nil },
        setDelegate: { capturedDelegate = $0 }
    )

    let viewControllerToPresent = UIViewController()
    var capturedViewController: UIViewController?

    try subject.load("some-id") { result in
        if case let .success(viewController) = result {
            capturedViewController = viewController
        }
    }

    // When
    subject.formDidload(viewControllerToPresent)

    // Then
    XCTAssertEqual(viewControllerToPresent, capturedViewController)
    XCTAssertNil(capturedDelegate)
}

This change makes the test pass:

  public class ThirdPartyFeedbackService: FeedbackService {
+     private var completion: ((Result<UIViewController, Error>) -> Void)?
      private let loadFeedback: (String) -> Void
      private let getDelegate: () -> ThirdPartyDelegate?
      private let setDelegate: (ThirdPartyDelegate?) -> Void

      public init(
          loadFeedback: @escaping (String) -> () = ThirdParty.loadFeedbackForm,
          getDelegate: @escaping () -> ThirdPartyDelegate? = { ThirdParty.delegate },
          setDelegate: @escaping (ThirdPartyDelegate?) -> Void = { ThirdParty.delegate = $0 }
      ) {
          self.loadFeedback = loadFeedback
          self.getDelegate = getDelegate
          self.setDelegate = setDelegate
      }

      public func load(_ id: String, completion: @escaping (Result<UIViewController, Error>) -> Void) throws {
          guard getDelegate() == nil else {
              throw MultipleFormLoadError()
          }

+         self.completion = { [setDelegate] result in
+             setDelegate(nil)
+             completion(result)
+         }

          setDelegate(self)
          loadFeedback(id)
      }

      public struct MultipleFormLoadError: Error {}
  }

  extension ThirdPartyFeedbackService: ThirdPartyDelegate {
      public func formDidload(_ viewController: UIViewController) {
+         completion?(.success(viewController))
      }

      public func formDidFailLoading(error: ThirdPartyError) {}
  }

Finally we need to handle the failing case:

func testWhenLoadFails_itInvokesTheCompletionWithAnError() throws {
    // Given
    var capturedDelegate: ThirdPartyDelegate?

    let subject = ThirdPartyFeedbackService(loadFeedback: { _ in }, getDelegate: { nil }, setDelegate: { capturedDelegate = $0 })

    let errorToPresent = NSError(domain: "test", code: 999, userInfo: nil)
    var capturedError: NSError?

    try subject.load("some-id") { result in
        if case let .failure(error) = result {
            capturedError = error as NSError
        }
    }

    // When
    // Pass the same error instance that the assertion below compares against.
    subject.formDidFailLoading(error: errorToPresent)

    // Then
    XCTAssertEqual(errorToPresent, capturedError)
    XCTAssertNil(capturedDelegate)
}

This requires a fairly complex change: we can’t create a ThirdPartyError ourselves because its init is not public.

Instead we need to work around this by making our function generic over Error. The generic function still satisfies the delegate requirement when it is called with a ThirdPartyError, and it also accepts the Error type we provide in our tests.
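To see the trick in isolation, here is a small sketch that relies on Swift allowing a generic method to act as the witness for a non-generic protocol requirement. VendorError, VendorDelegate, Recorder and TestError are hypothetical names that mirror the shapes in this post. Because everything lives in one file, VendorError can be constructed here, which would not be possible with the real vendor type.

```swift
import Foundation

// Hypothetical vendor types mirroring ThirdPartyError and ThirdPartyDelegate.
struct VendorError: Error {
    let description: String
}

protocol VendorDelegate {
    func formDidFailLoading(error: VendorError)
}

final class Recorder: VendorDelegate {
    private(set) var lastError: Error?

    // Generic witness: satisfies the protocol requirement with T == VendorError,
    // while tests can call it directly with any other Error type.
    func formDidFailLoading<T: Error>(error: T) {
        lastError = error
    }
}

// An error type that tests are free to construct.
struct TestError: Error, Equatable {
    let code: Int
}

let recorder = Recorder()

// Called directly, as a test would.
recorder.formDidFailLoading(error: TestError(code: 999))
let fromTest = recorder.lastError

// Called through the protocol, as the vendor SDK would.
let vendorFacing: VendorDelegate = recorder
vendorFacing.formDidFailLoading(error: VendorError(description: "boom"))
let fromVendor = recorder.lastError
```

Both calls end up in the same method body, so the failure path can be exercised without ever constructing the vendor's error type.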

  public class ThirdPartyFeedbackService: FeedbackService {
      private var completion: ((Result<UIViewController, Error>) -> Void)?
      private let loadFeedback: (String) -> Void
      private let getDelegate: () -> ThirdPartyDelegate?
      private let setDelegate: (ThirdPartyDelegate?) -> Void

      public init(
          loadFeedback: @escaping (String) -> () = ThirdParty.loadFeedbackForm,
          getDelegate: @escaping () -> ThirdPartyDelegate? = { ThirdParty.delegate },
          setDelegate: @escaping (ThirdPartyDelegate?) -> Void = { ThirdParty.delegate = $0 }
      ) {
          self.loadFeedback = loadFeedback
          self.getDelegate = getDelegate
          self.setDelegate = setDelegate
      }

      public func load(_ id: String, completion: @escaping (Result<UIViewController, Error>) -> Void) throws {
          guard getDelegate() == nil else {
              throw MultipleFormLoadError()
          }

          self.completion = { [setDelegate] result in
              setDelegate(nil)
              completion(result)
          }

          setDelegate(self)
          loadFeedback(id)
      }

      public struct MultipleFormLoadError: Error {}
  }

  extension ThirdPartyFeedbackService: ThirdPartyDelegate {
      public func formDidload(_ viewController: UIViewController) {
          completion?(.success(viewController))
      }

-     public func formDidFailLoading(error: ThirdPartyError) {
+     public func formDidFailLoading<T: Error>(error: T) {
+         completion?(.failure(error))
      }
  }

How’d we do?

The design issues raised at the beginning made it harder to wrap the dependency, and we had to make some decisions along the way, like how we fail if multiple loads are called. We had to get a little creative to make this work, and concerns like thread safety and cancellation have been omitted for brevity.

Just for kicks

We might look at our protocol and think that it’s not really a useful abstraction to have. Migrating to using a simple value type is fairly mechanical and worth having a look to see how it pans out.

public struct FeedbackService {
    public let load: (_ form: String, _ completion: @escaping (Result<UIViewController, Error>) -> Void) throws -> Void
}

extension FeedbackService {
    public static func thirdParty(
        loadFeedback: @escaping (String) -> () = ThirdParty.loadFeedbackForm,
        getDelegate: @escaping () -> ThirdPartyDelegate? = { ThirdParty.delegate },
        setDelegate: @escaping (ThirdPartyDelegate?) -> Void = { ThirdParty.delegate = $0 },
        makeDelegate: () -> Delegate = Delegate.init
    ) -> FeedbackService {
        let delegate = makeDelegate()

        return .init { id, completion in
            guard getDelegate() == nil else {
                throw MultipleFormLoadError()
            }

            delegate.completion = { result in
                setDelegate(nil)
                completion(result)
            }

            setDelegate(delegate)
            loadFeedback(id)
        }
    }

    public class Delegate: ThirdPartyDelegate {
        var completion: ((Result<UIViewController, Error>) -> Void)?

        public init() {}

        public func formDidload(_ viewController: UIViewController) {
            completion?(.success(viewController))
        }

        public func formDidFailLoading<T: Error>(error: T) {
            completion?(.failure(error))
        }
    }

    public struct MultipleFormLoadError: Error {}
}

Looking at the above - the core of the logic does not change at all.

We lose some lines as we are capturing the params to the thirdParty function and don’t need to create instance variables.

We gain some lines by implementing an inline delegate class.

The tests can also be updated with minimal changes:

final class ThirdPartyFeedbackServiceTests: XCTestCase {
    func testLoadSetsDelegateAndInvokesLoad() throws {
        // Given
        let delegate = FeedbackService.Delegate()
        var capturedFormID: String?
        var capturedDelegate: ThirdPartyDelegate?

        let subject = FeedbackService.thirdParty(
            loadFeedback: { capturedFormID = $0 },
            getDelegate: { nil },
            setDelegate: { capturedDelegate = $0 },
            makeDelegate: { delegate }
        )

        // When
        try subject.load("some-id") { _ in }

        // Then
        XCTAssertEqual("some-id", capturedFormID)
        // The cast keeps === valid even though ThirdPartyDelegate is not class-bound.
        XCTAssertTrue(delegate === (capturedDelegate as? FeedbackService.Delegate))
    }

    func testWhenLoadIsInFlight_subsequentLoadsWillThrow() throws {
        let subject = FeedbackService.thirdParty(getDelegate: { FeedbackService.Delegate() })

        XCTAssertThrowsError(try subject.load("test", { _ in })) { error in
            XCTAssertTrue(error is FeedbackService.MultipleFormLoadError)
        }
    }

    func testWhenLoadIsSuccessful_itInvokesTheCompletionWithTheLoadedViewController() throws {
        // Given
        let delegate = FeedbackService.Delegate()
        var capturedDelegate: ThirdPartyDelegate?

        let subject = FeedbackService.thirdParty(loadFeedback: { _ in }, getDelegate: { nil }, setDelegate: { capturedDelegate = $0 }, makeDelegate: { delegate })

        let viewControllerToPresent = UIViewController()
        var capturedViewController: UIViewController?

        try subject.load("some-id") { result in
            if case let .success(viewController) = result {
                capturedViewController = viewController
            }
        }

        // When
        delegate.formDidload(viewControllerToPresent)

        // Then
        XCTAssertEqual(viewControllerToPresent, capturedViewController)
        XCTAssertNil(capturedDelegate)
    }

    func testWhenLoadFails_itInvokesTheCompletionWithAnError() throws {
        // Given
        let delegate = FeedbackService.Delegate()
        var capturedDelegate: ThirdPartyDelegate?

        let subject = FeedbackService.thirdParty(loadFeedback: { _ in }, getDelegate: { nil }, setDelegate: { capturedDelegate = $0 }, makeDelegate: { delegate })

        let errorToPresent = NSError(domain: "test", code: 999, userInfo: nil)
        var capturedError: NSError?

        try subject.load("some-id") { result in
            if case let .failure(error) = result {
                capturedError = error as NSError
            }
        }

        // When
        // Pass the same error instance that the assertion below compares against.
        delegate.formDidFailLoading(error: errorToPresent)

        // Then
        XCTAssertEqual(errorToPresent, capturedError)
        XCTAssertNil(capturedDelegate)
    }
}
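The value type version also changes what app-level test doubles can look like, since a stub is just another value built from a closure rather than a mock class. The sketch below is a rough illustration under assumptions: FormViewController stands in for UIViewController so the snippet compiles without UIKit, and the stub helper is a hypothetical name rather than part of the code above.

```swift
import Foundation

// Stand-in for UIViewController (assumption) so the sketch builds without UIKit.
final class FormViewController {}

// Same shape as the struct-based FeedbackService, using the stand-in type.
struct FeedbackService {
    let load: (_ form: String, _ completion: @escaping (Result<FormViewController, Error>) -> Void) throws -> Void
}

extension FeedbackService {
    // Hypothetical helper: a service that immediately completes with a canned result.
    static func stub(_ result: Result<FormViewController, Error>) -> FeedbackService {
        FeedbackService { _, completion in completion(result) }
    }
}

// Usage: hand the stub to whatever would normally receive .thirdParty().
let form = FormViewController()
let service = FeedbackService.stub(.success(form))
var received: FormViewController?
try service.load("some-id") { result in
    if case let .success(viewController) = result { received = viewController }
}
```

Whether this is nicer than a mock class comes down to taste, much like the choice between the two production versions.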

Conclusion

I quite like the second version and think it was worth taking the extra time to explore both approaches, even if it boils down to personal preference. It would be nice if third party vendors made testing a first class concern rather than leaving us scratching our heads for workarounds or just accepting that we will call the live code. Hopefully some of the techniques/ideas are useful, and no doubt I’ll find myself reading this post in a few months’ time when I have to wrap something else awkward.